Speed Comparison: Fortran vs Julia vs Python (Runge-Kutta Method)

This page compares the computational speed of Fortran, Python, and Julia. It is often said that Python is slow, or that Julia is as fast as Fortran. However, such comparisons sometimes rely on highly optimized libraries, or optimize the code in only one of the languages, so they are not always meaningful for typical users. Here, we compare the three languages on a relatively simple problem: solving Newton's equation of motion for a one-dimensional harmonic oscillator with the Runge-Kutta method. To keep the comparison fair, we use simple code without any particular optimization, the kind of code that an average student who has just started learning numerical computation in a university course would write.

1. Problem Setting: Newton's Equation of Motion for a One-Dimensional Harmonic Oscillator

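To compare the computational speeds of Fortran, Python, and Julia, we consider solving Newton's equation of motion for a one-dimensional harmonic oscillator. The equation of motion to be solved is

$$ m \frac{d^2 x}{dt^2} = -k x, \tag{1} $$

where $m$ is the mass and $k$ is the spring constant.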

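To solve Newton's equation numerically, we rewrite the second-order ordinary differential equation as the following system of first-order differential equations for the position $x$ and the velocity $v$:

$$ \frac{dx}{dt} = v, \tag{2} $$

$$ \frac{dv}{dt} = -\frac{k}{m} x. \tag{3} $$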

2. Codes for Fortran, Python, Julia

To compare the computational speeds of Fortran, Python, and Julia, we solve the system of differential equations (2) and (3) using the 4th-order Runge-Kutta method. The code written in each language is shown below; please refer to each listing for the details of the computation. In outline, the time step dt is set to 0.01 and the time evolution is computed for 10^8 steps. Additionally, the position and velocity at every step are stored in arrays, and the values from the final 1000 steps are written to a file.

Fortran Code

    program main
      implicit none
      real(8) :: x, v
      real(8),allocatable :: xt(:), vt(:)
      real(8) :: mass, k, dt
      integer :: it, nt

      mass = 1d0
      k = 1d0
      dt = 1d-2
      nt = 100000000

      allocate(xt(0:nt), vt(0:nt))

      x = 0d0
      v = 1d0

      do it = 0, nt
        xt(it) = x
        vt(it) = v
        call Runge_Kutta_4th(x,v,dt,mass,k)
      end do

      open(20,file="result_fortran.out")
      do it = nt-1000, nt
        write(20,"(3e26.16e3)")it*dt, xt(it), vt(it)
      end do
      close(20)

    contains

      subroutine Runge_Kutta_4th(x,v,dt,mass,k)
        implicit none
        real(8),intent(inout) :: x, v
        real(8),intent(in) :: dt, mass, k
        real(8) :: x1,x2,x3,x4,v1,v2,v3,v4

        ! RK1
        x1 = v
        v1 = force(x, mass, k)

        ! RK2
        x2 = v+0.5d0*dt*v1
        v2 = force(x+0.5d0*x1*dt, mass, k)

        ! RK3
        x3 = v+0.5d0*dt*v2
        v3 = force(x+0.5d0*x2*dt, mass, k)

        ! RK4
        x4 = v+dt*v3
        v4 = force(x+x3*dt, mass, k)

        x = x + (x1+2d0*x2+2d0*x3+x4)*dt/6d0
        v = v + (v1+2d0*v2+2d0*v3+v4)*dt/6d0

      end subroutine Runge_Kutta_4th

      real(8) function force(x,mass,k)
        implicit none
        real(8),intent(in) :: x, mass, k

        force = -x*k/mass

      end function force

    end program main

Python code

    import numpy as np


    def Runge_Kutta_4th(x, v, dt, mass, k):
        # RK1
        x1 = v
        v1 = force(x, mass, k)

        # RK2
        x2 = v + 0.5 * dt * v1
        v2 = force(x + 0.5 * x1 * dt, mass, k)

        # RK3
        x3 = v + 0.5 * dt * v2
        v3 = force(x + 0.5 * x2 * dt, mass, k)

        # RK4
        x4 = v + dt * v3
        v4 = force(x + x3 * dt, mass, k)

        x = x + (x1 + 2 * x2 + 2 * x3 + x4) * dt / 6
        v = v + (v1 + 2 * v2 + 2 * v3 + v4) * dt / 6

        return x, v


    def force(x, mass, k):
        return -x * k / mass


    def main():
        mass = 1.0
        k = 1.0
        dt = 1e-2
        nt = 100000000

        xt = np.zeros(nt + 1)
        vt = np.zeros(nt + 1)

        x = 0.0
        v = 1.0

        for it in range(nt + 1):
            xt[it] = x
            vt[it] = v
            x, v = Runge_Kutta_4th(x, v, dt, mass, k)

        with open("result_python.out", "w") as f:
            for it in range(nt - 1000, nt + 1):
                f.write(f"{it * dt:.16e}\t{xt[it]:.16e}\t{vt[it]:.16e}\n")


    if __name__ == "__main__":
        main()

Julia code

    function main()
        mass = 1.0
        k = 1.0
        dt = 1e-2
        nt = 100000000

        xt = zeros(Float64, nt+1)
        vt = zeros(Float64, nt+1)

        x = 0.0
        v = 1.0

        for it = 1:nt+1
            xt[it] = x
            vt[it] = v
            x, v = Runge_Kutta_4th!(x, v, dt, mass, k)
        end

        open("result_julia.out", "w") do file
            # Julia arrays are 1-based: index it+1 holds the state at time it*dt.
            # The range matches the Fortran and Python output (steps nt-1000 to nt).
            for it = nt-1000:nt
                println(file, "$(it*dt) $(xt[it+1]) $(vt[it+1])")
            end
        end
    end

    function Runge_Kutta_4th!(x, v, dt, mass, k)
        # RK1
        x1 = v
        v1 = force(x, mass, k)

        # RK2
        x2 = v + 0.5 * dt * v1
        v2 = force(x + 0.5 * x1 * dt, mass, k)

        # RK3
        x3 = v + 0.5 * dt * v2
        v3 = force(x + 0.5 * x2 * dt, mass, k)

        # RK4
        x4 = v + dt * v3
        v4 = force(x + x3 * dt, mass, k)

        x += (x1 + 2 * x2 + 2 * x3 + x4) * dt / 6
        v += (v1 + 2 * v2 + 2 * v3 + v4) * dt / 6

        return x, v
    end

    function force(x, mass, k)
        return -x * k / mass
    end

    main()
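As a sanity check on the integrator shared by the three listings, the numerical result can be compared with the exact solution of the harmonic oscillator. For the initial condition used here (x = 0, v = 1), the exact solution is x(t) = sin(ωt)/ω and v(t) = cos(ωt) with ω = sqrt(k/mass). The following is a minimal sketch, not part of the benchmark: the helper names `rk4_step` and `max_error` are illustrative, and the run is shortened to 1000 steps so that it finishes instantly.

```python
import math


def force(x, mass, k):
    # Acceleration of the harmonic oscillator, as in the benchmark codes
    return -x * k / mass


def rk4_step(x, v, dt, mass, k):
    # One 4th-order Runge-Kutta step for dx/dt = v, dv/dt = -k*x/mass
    x1 = v
    v1 = force(x, mass, k)
    x2 = v + 0.5 * dt * v1
    v2 = force(x + 0.5 * dt * x1, mass, k)
    x3 = v + 0.5 * dt * v2
    v3 = force(x + 0.5 * dt * x2, mass, k)
    x4 = v + dt * v3
    v4 = force(x + dt * x3, mass, k)
    x_new = x + (x1 + 2 * x2 + 2 * x3 + x4) * dt / 6
    v_new = v + (v1 + 2 * v2 + 2 * v3 + v4) * dt / 6
    return x_new, v_new


def max_error(nt=1000, dt=1e-2, mass=1.0, k=1.0):
    # Integrate from x(0) = 0, v(0) = 1 and record the largest deviation
    # of x and v from the exact solution over all steps
    omega = math.sqrt(k / mass)
    x, v = 0.0, 1.0
    worst = 0.0
    for it in range(1, nt + 1):
        x, v = rk4_step(x, v, dt, mass, k)
        t = it * dt
        worst = max(worst,
                    abs(x - math.sin(omega * t) / omega),
                    abs(v - math.cos(omega * t)))
    return worst


if __name__ == "__main__":
    print(f"max deviation from exact solution: {max_error():.3e}")
```

With dt = 0.01 the deviation stays far below the plotting resolution over this short run, which is what one would expect from a 4th-order method.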

3. Compilation and Execution of Computations

In this comparison, we use gfortran (GNU Fortran) and ifx (Intel Fortran; formerly ifort) as Fortran compilers. GNU Fortran accepts optimization options at compile time to improve computational speed. To also investigate the impact of these options, we measure the speed for four optimization levels: -O0, -O1, -O2, and -O3. For Intel Fortran, in addition to these levels, we also test the -xHOST option, which optimizes for the processor on which the code is compiled and run.

The Python code (newton.py) is executed in the terminal with python newton.py, and the Julia code (newton.jl) is similarly executed with julia newton.jl. Some readers may wonder whether Julia's compilation time distorts the comparison with Fortran. We have confirmed that it does not affect the conclusions of this comparison, and we return to this point in Section 6.

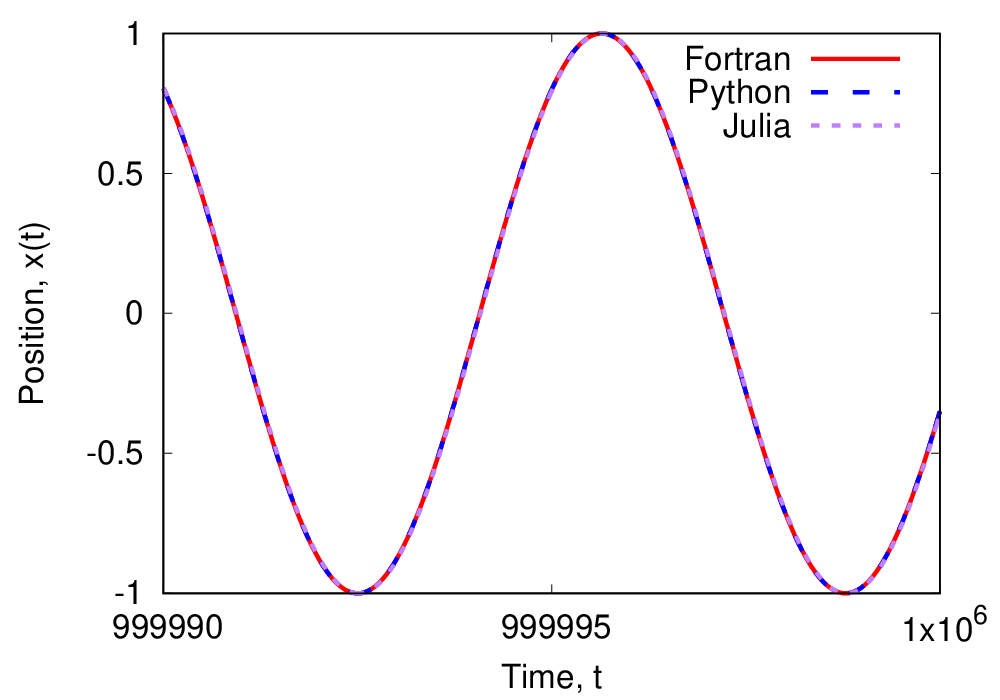

Executing the prepared Fortran code newton.f90, Python code newton.py, and Julia code newton.jl produces output files containing the results of solving Newton's equation. The accompanying figure shows the time evolution of the position.

4. Comparison of Computational Speeds of Fortran, Python, Julia

To compare the computational speeds of Fortran, Python, and Julia, we executed each of the three computation codes five times and measured the computation time for each run. The table below shows the average execution time and standard error obtained from this measurement.

Execution Time Required for Solving Newton's Equation (Time Evolution of 10^8 Steps)

| Language (Compiler Name, Compile Option) | Execution Time ± Standard Error (seconds) |
|---|---|
| Fortran (gfortran -O0) | 3.61 ± 0.00 |
| Fortran (gfortran -O1) | 1.66 ± 0.00 |
| Fortran (gfortran -O2) | 1.69 ± 0.01 |
| Fortran (gfortran -O3) | 1.70 ± 0.02 |
| Fortran (ifx -O0) [formerly ifort] | 3.60 ± 0.00 |
| Fortran (ifx -O1) [formerly ifort] | 1.16 ± 0.00 |
| Fortran (ifx -O2) [formerly ifort] | 1.15 ± 0.00 |
| Fortran (ifx -O3) [formerly ifort] | 1.15 ± 0.00 |
| Fortran (ifx -O3 -xHOST) [formerly ifort] | 0.92 ± 0.00 |
| Python | 75.33 ± 0.07 |
| Julia | 3.06 ± 0.03 |
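The averages and standard errors in the table above can be obtained from repeated runs along the following lines. This is only a sketch under stated assumptions: the page does not say which timing tool was used, so the wall-clock timing via `subprocess`, the helper names `time_command` and `mean_and_stderr`, and the definition of the standard error as the sample standard deviation divided by sqrt(n) are all assumptions made here.

```python
import math
import statistics
import subprocess
import sys
import time


def time_command(cmd, repeats=5):
    """Run cmd (a list of arguments) repeatedly; return wall-clock seconds per run."""
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        subprocess.run(cmd, check=True)  # raises if the program exits with an error
        timings.append(time.perf_counter() - start)
    return timings


def mean_and_stderr(samples):
    """Return the mean and the standard error of the mean (sample std / sqrt(n))."""
    mean = statistics.mean(samples)
    stderr = statistics.stdev(samples) / math.sqrt(len(samples))
    return mean, stderr


if __name__ == "__main__":
    # Trivial stand-in command so the sketch runs anywhere;
    # replace it with e.g. ["julia", "newton.jl"] or ["./a.out"]
    runs = time_command([sys.executable, "-c", "pass"])
    mean, stderr = mean_and_stderr(runs)
    print(f"{mean:.2f} ± {stderr:.2f} seconds")
```

Timing the whole process in this way includes interpreter startup and, for Julia, just-in-time compilation, which matches how the programs are launched in this comparison.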

From the table above, let us first compare the Fortran compilers and compile options. With the GNU Fortran compiler (gfortran), disabling optimization with -O0 gives an execution time about 2.2 times longer than with optimization enabled. In other words, enabling optimization speeds up a computation like this one by a factor of about 2.2. There is little difference between -O1, -O2, and -O3, so for a simple computation like this, optimization levels beyond -O1 do not significantly affect the speed.

Comparing the Intel Fortran compiler (ifx; formerly ifort) with GNU Fortran, the execution times without optimization (-O0) are nearly identical, but with -O1 or higher Intel Fortran is significantly faster. In particular, combining -O3 with -xHOST (optimization for the executing processor) makes Intel Fortran about 1.8 times faster than GNU Fortran.

Comparing the execution time of Fortran (ifx -O3 -xHOST) with Python, Fortran is about 81.9 times faster than Python. Moreover, comparing Fortran (ifx -O3 -xHOST) with Julia, Fortran is about 3.3 times faster than Julia. Furthermore, comparing the execution time of Julia with Python, Julia is about 24.6 times faster than Python.
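The ratios quoted in this section follow directly from the mean execution times in the table. A short sketch of the arithmetic is given below; the dictionary `times` and the helper `slowdown` are illustrative names introduced here, and the values are copied from the table for 10^8 steps.

```python
# Mean execution times in seconds, copied from the table for 10^8 steps
times = {
    "gfortran -O0": 3.61,
    "gfortran -O1": 1.66,
    "ifx -O3 -xHOST": 0.92,
    "Python": 75.33,
    "Julia": 3.06,
}


def slowdown(slow, fast):
    # How many times longer the entry `slow` takes compared with `fast`
    return times[slow] / times[fast]


if __name__ == "__main__":
    pairs = [
        ("gfortran -O0", "gfortran -O1"),
        ("gfortran -O1", "ifx -O3 -xHOST"),
        ("Python", "ifx -O3 -xHOST"),
        ("Julia", "ifx -O3 -xHOST"),
        ("Python", "Julia"),
    ]
    for slow, fast in pairs:
        print(f"{slow} / {fast}: {slowdown(slow, fast):.1f}")
```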

5. Thoughts and Comments on the Speed Comparison

In the above comparison of Fortran, Python, and Julia, Python requires an overwhelmingly longer execution time. This seems to be because Python is an interpreted language. However, this does not mean that Python is inferior. Python has its own strengths, such as a rich library ecosystem and ease of writing, which is why it is so widely used today.

Also, there is a common belief that Julia is very fast, but when actually comparing it to Fortran, it takes about 3.3 times longer to execute. It is difficult to evaluate what this 3.3 times figure means, but imagining how you would feel if the code you use in your own research or work took 3.3 times longer to execute might give you some insight into the pros and cons of Julia.

6. A More Serious Comparison Between Fortran and Julia

In the above comparison, the Julia execution time was measured by running julia newton.jl, so the compilation time is included in the execution time. Some readers may therefore feel that the comparison between Julia and Fortran is not accurate. As an additional analysis, we increased the number of time steps tenfold, which increases the computation time roughly tenfold and reduces the relative impact of Julia's compilation time. For Fortran, the comparison uses the ifx -O3 -xHOST compile command.

The results of the additional analysis are shown in the table below. Even with the amount of computation increased tenfold, Fortran is still more than three times faster than Julia.

Execution Time Required for Solving Newton's Equation (Time Evolution of 10^9 Steps)

| Language (Compiler Name, Compile Option) | Execution Time ± Standard Error (seconds) |
|---|---|
| Fortran (ifx -O3 -xHOST) | 9.44 ± 0.02 |
| Julia | 29.05 ± 0.00 |
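One way to read the two sets of measurements together is to assume that the execution time is a fixed overhead (startup plus, for Julia, compilation) plus a cost proportional to the number of steps. The sketch below fits this two-parameter model to the 10^8-step and 10^9-step timings reported on this page. The linear model and the helper name `fit_overhead` are assumptions made here, and a fit through only two points carries no error estimate, so the numbers should be taken as rough indications.

```python
def fit_overhead(t_small, t_large, n_small=10**8, n_large=10**9):
    # Solve t = overhead + per_step * n from two (n, t) measurements
    per_step = (t_large - t_small) / (n_large - n_small)
    overhead = t_small - per_step * n_small
    return overhead, per_step


# Mean execution times in seconds for 10^8 and 10^9 steps, from the two tables
julia_overhead, julia_per_step = fit_overhead(3.06, 29.05)
fortran_overhead, fortran_per_step = fit_overhead(0.92, 9.44)

if __name__ == "__main__":
    print(f"Julia:   overhead {julia_overhead:.3f} s, {julia_per_step:.3e} s/step")
    print(f"Fortran: overhead {fortran_overhead:.3f} s, {fortran_per_step:.3e} s/step")
    print(f"per-step ratio Julia/Fortran: {julia_per_step / fortran_per_step:.2f}")
```

Under this model the fixed overhead for Julia comes out at roughly 0.17 seconds, small compared with the 3.06-second run, while the fitted Fortran overhead is slightly negative, that is, indistinguishable from zero at this precision. The per-step cost ratio remains close to three, consistent with the conclusion above.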

7. Investigation of Fortran's Compilation Time

In the previous section [6. A More Serious Comparison Between Fortran and Julia], we confirmed that Julia's compilation time does not significantly affect the speed comparison. But what about Fortran's compilation time? Here, we measure the time it takes to compile the Fortran code mentioned above. The results of the compilation time for each compile option are shown below.

Compilation Time for gfortran and ifx

| Compiler, Compile Option | Compilation Time (seconds) |
|---|---|
| gfortran -O0 | 0.037 |
| gfortran -O1 | 0.039 |
| gfortran -O2 | 0.042 |
| gfortran -O3 | 0.042 |
| ifx -O0 | 0.057 |
| ifx -O1 | 0.066 |
| ifx -O2 | 0.072 |
| ifx -O3 | 0.072 |
| ifx -O3 -xHOST | 0.073 |

8. About the Comparison's Execution Environment

This verification was performed in the following environment on December 28, 2023.

- CPU: Intel(R) Core(TM) i5-1145G7 @ 2.60GHz

- OS: Ubuntu 20.04.6

- GNU Fortran: gfortran version 9.4.0

- Intel Fortran: ifx 2024.0.2 20231213

- Python: Python 3.8.10

- Julia: Julia version 1.7.3
